While smashing subatomic particles together may not seem like a great business proposition, there are other applications of considerable economic importance that involve the scientific study of similarly large data sets, such as the analysis of seismological data for oil exploration, Carpenter said. "If we can scale up to this 10-petabyte level that they have as their goal, it will be a good test for Storage Tank," he said. Grey sees benefits arising from the interoperability stress test of making the system work for many differently minded users. "The idea is 8,000 scientists around the world should be able to access the data from their own labs, using all kinds of computer and system technologies," he said. "It's the most anarchic test case you can imagine. If things work in this community, they will work elsewhere."

EUROPEAN SUPERCOLLIDER SOFTWARE

The system runs principally on Linux, but the idea is to make the software more widely available than that, particularly the client software needed to integrate with the local filing system, he said. The Storage Tank client software will work with the Windows, AIX, Solaris and Linux operating systems, according to the Web site for IBM's research center in Almaden, Calif., where Storage Tank is being developed.

This implementation of Storage Tank will use the iSCSI storage-area network protocol, running over 10G-bit/sec Ethernet, but, "The way Storage Tank is designed, it could be over any SAN in the back end," Carpenter said. IBM plans to use the project as a testbed for this storage virtualization and file management technology, which it says will play a pivotal role in its work with CERN.

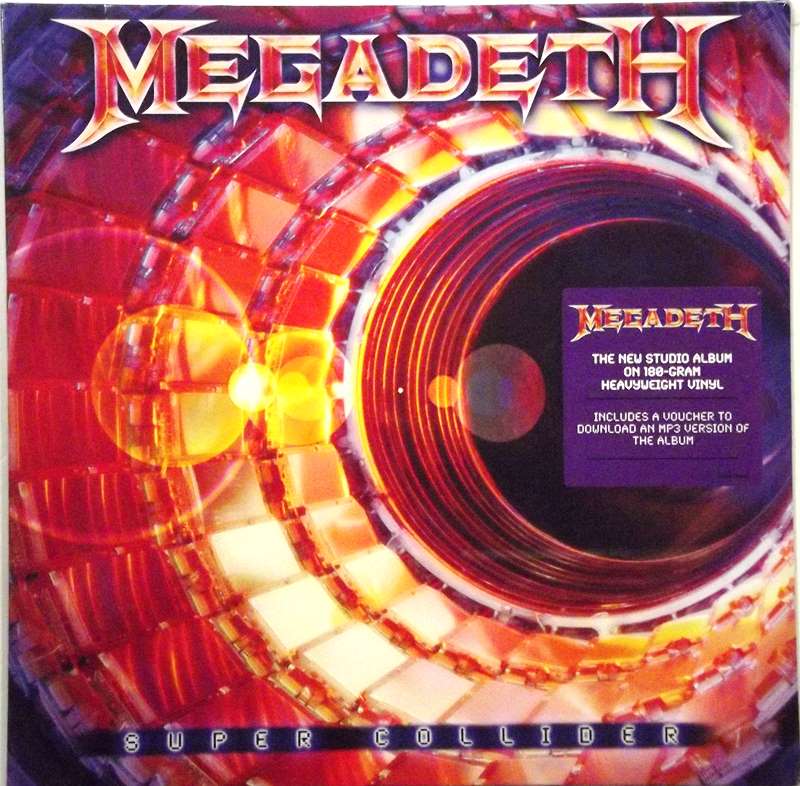

EUROPEAN SUPERCOLLIDER DOWNLOAD

The data for those tests will come from simulations of hadron collisions based on current theories. Comparing these with the petabytes of data gathered from experimental observations will enable scientists to test their models. With the collider generating 100M bytes of data per second in operation, the data management task is huge. "It's really out of the scope of traditional network-attached storage. When you have these quantities of data, managing and organizing them is a problem," said Brian Carpenter, distinguished engineer at IBM Systems Group. Storage Tank uses metadata servers to keep track of where data is located. Network clients ask the servers where to find the data they want, then download it straight from the network storage devices where it is located - rather like the way the Internet's DNS points clients towards hosts, but doesn't intervene in the transfer of data from them, Carpenter said.
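The lookup-then-direct-read pattern Carpenter describes can be sketched in a few lines of Python. This is only an illustration of the general idea, not Storage Tank's actual design or API: the class names `StorageDevice` and `MetadataServer`, the `fetch` helper and the file path are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class StorageDevice:
    """Hypothetical network storage device that holds file contents."""
    name: str
    blobs: dict = field(default_factory=dict)

    def write(self, path: str, data: bytes) -> None:
        self.blobs[path] = data

    def read(self, path: str) -> bytes:
        return self.blobs[path]


@dataclass
class MetadataServer:
    """Hypothetical metadata server: records where data lives, never the data itself."""
    locations: dict = field(default_factory=dict)

    def register(self, path: str, device: StorageDevice) -> None:
        self.locations[path] = device

    def locate(self, path: str) -> StorageDevice:
        return self.locations[path]


def fetch(path: str, metadata: MetadataServer) -> bytes:
    """Client side: ask where the data is, then read it straight from the device."""
    device = metadata.locate(path)  # step 1: lookup, comparable to a DNS query
    return device.read(path)        # step 2: transfer bypasses the metadata server


# Usage: one device, one file, one lookup (all values are made up).
disk_a = StorageDevice("disk-a")
disk_a.write("/run1/events.dat", b"collision data")
meta = MetadataServer()
meta.register("/run1/events.dat", disk_a)
data = fetch("/run1/events.dat", meta)
```

The point of the split is that the metadata server only answers small "where is it?" queries, so the bulk transfer does not pass through it.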

"The drive is to get a working grid up that can deal with the petabytes of data coming out of the LHC by 2007," said François Grey, CERN openlab development officer. "We are investigating techniques that are not yet commercial but will be by the time LHC is up and running," he said. It's also an opportunity for CERN's industrial partners to test their technology in real-world applications, he added. The first two industrial sponsors, HP and Enterasys Networks, joined the effort last September. HP contributed a 32-node cluster of computers built around Intel's Itanium 2 processors. Enterasys donated a 10G-bit/sec Ethernet network to connect them, and agreed to provide engineering assistance and product and technology forums for a total investment it valued at $1.5 million. For its part, IBM will supply 20 terabytes (20,000G bytes) of disk storage, a cluster of six eServer xSeries systems running Linux, and onsite engineering support to a total value of $2.5 million, it announced Wednesday. The equipment will be delivered by the end of the year. That 20 terabytes of storage is a long way from the volume that CERN ultimately envisages, but the goal is to bring in more storage progressively, so as to conduct tests with around a petabyte of storage by 2005, Grey said.
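To give a feel for the gap between 20 terabytes and a petabyte, the short Python calculation below estimates how long the collider's quoted output of 100M bytes per second would take to fill each capacity. It assumes decimal units (the article equates 20 terabytes with 20,000G bytes) and, as a simplification not stated in the article, that data arrives continuously at that rate.

```python
RATE_BYTES_PER_SEC = 100 * 10**6  # 100M bytes per second, the figure quoted in the article
SECONDS_PER_DAY = 86_400

TERABYTE = 10**12  # decimal units, matching "20 terabytes (20,000G bytes)"
PETABYTE = 10**15


def days_to_fill(capacity_bytes: int, rate: int = RATE_BYTES_PER_SEC) -> float:
    """Days needed to fill a capacity, assuming nonstop output at `rate`."""
    return capacity_bytes / rate / SECONDS_PER_DAY


for label, capacity in [
    ("20 terabytes", 20 * TERABYTE),
    ("1 petabyte", PETABYTE),
    ("10 petabytes", 10 * PETABYTE),
]:
    print(f"{label}: {days_to_fill(capacity):.1f} days")
```

Under those assumptions the initial 20 terabytes would fill in a little over two days, a petabyte in roughly 116 days, and the 10-petabyte goal mentioned in the article in a bit more than three years.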
